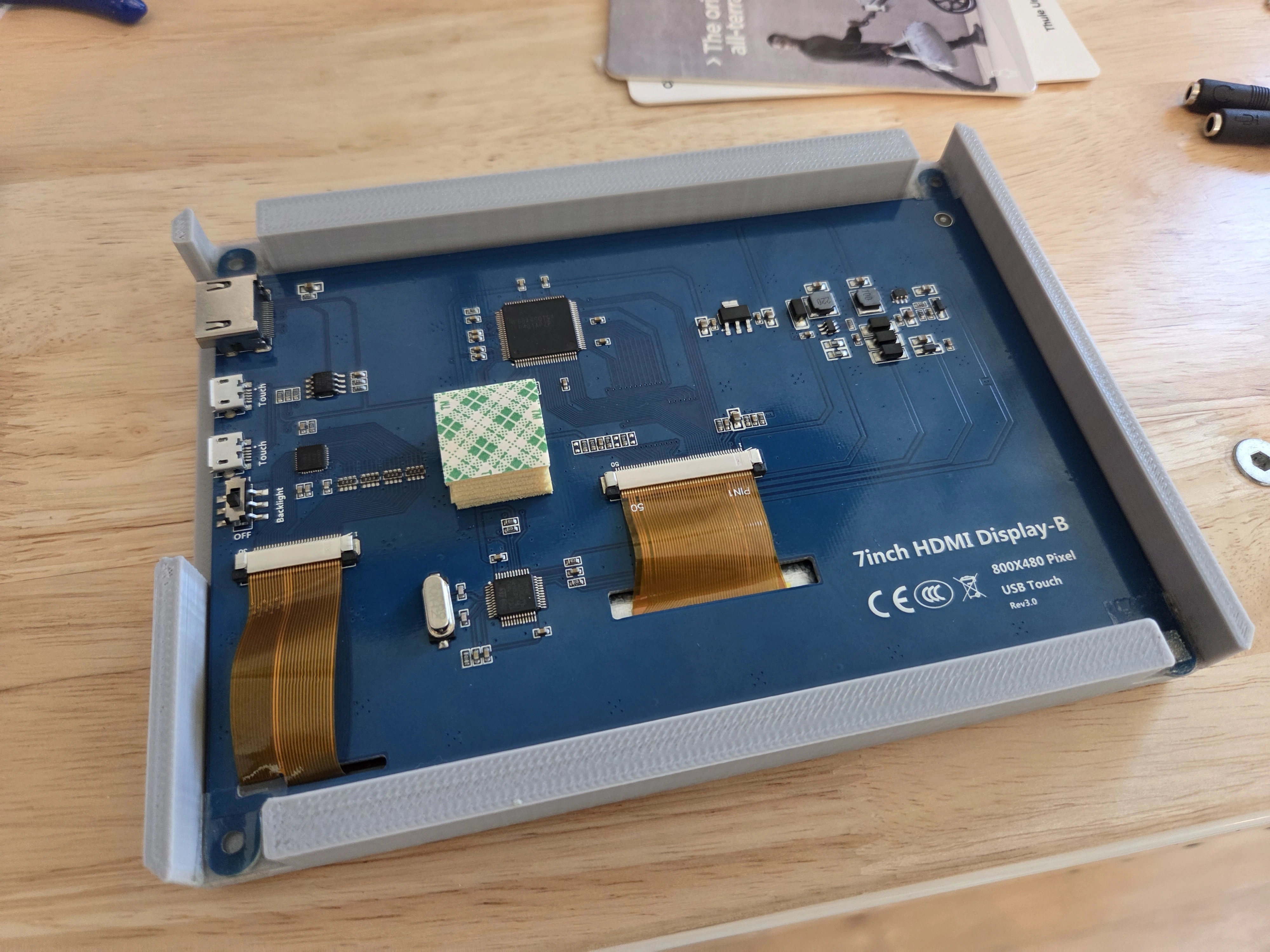

Dashboard Breakdowns

My home automation dashboard broke down in two ways this year. Hardware, then software. My wife and I really liked this dashboard. It saved us from pulling out our phones and getting sucked into emails or social media while we were supposed to be living our lives.

Both breakdowns ended in rebuilds. The hardware was obviously solved by 3D modeling and printing, and the software became another problem I would vibe code - no - vibe engineer - my way out of.

After rebuilding, it is totally different. But cooler perhaps? And everything it uses should last many decades this time, instead of just one decade.

December 14, 2025 · 9 minutes · Read more →

The Real GPT-5 Was The Friends We Made Along The Way

Warning: This article has a lot of embedded code, so the ball machine is slow unless you have a REALLY fast computer. Play at your own risk.

Seriously, though.

GPT-5, as a simple text completion model, is not a revelation.

This isn’t so surprising. It was becoming clearer with every new raw LLM release that the fundamental improvements from scaling solely the performance of the core text predictor were starting to show diminishing returns. But I’m going to make an argument today that, although the LLM itself is not nearly as much of a leap from GPT-4 as GPT-4 was from GPT-3, we have still seen at least as much real improvement, a whole version number's worth, between the releases of GPT-4 and GPT-5 as we did between GPT-3 and GPT-4. The reasons for that mostly lie in what exists around that LLM core.

August 24, 2025 · 9 minutes · Read more →

On AI Software Development, Vibe Coding Edition

Recently I read the AI 2027 paper. I was surprised to see Scott Alexander’s name on this paper, and I was doubly surprised to see him do his first face reveal podcast about it with Dwarkesh.

On its face, this is one of the most aggressive predictions for when we will have AGI (at least the new definition of AGI, which is something that is comparable to or better than humans at all non-bodily tasks) that I have read. Even as a longtime believer in Ray Kurzweil’s Singularity predictions, I find 2027 very early. I realize that Kurzweil’s AGI date was also late 2020s, which puts his prediction in line with AI 2027, while 2045 was his singularity prediction. But 2027 still feels early to me.

August 1, 2025 · 21 minutes · Read more →

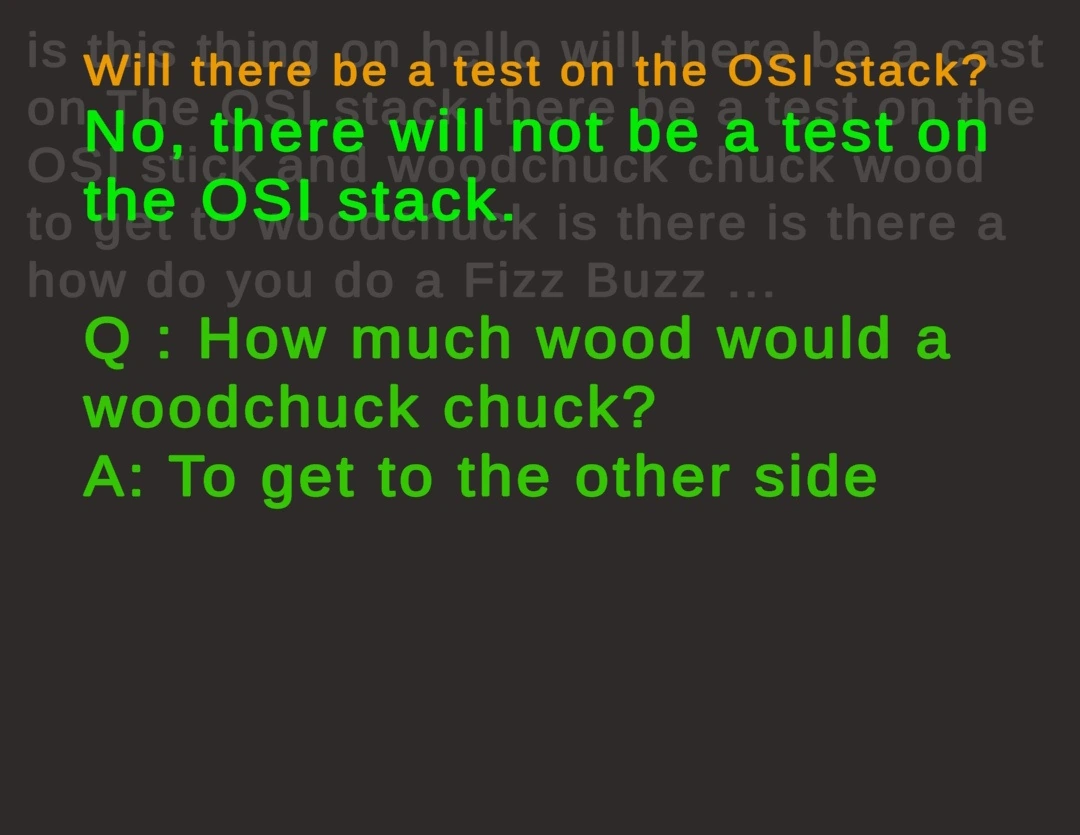

My Experiments with AI Cheating

The advent of general coding AI assistants almost immediately changed how I think about hiring and interviews.

In the software engineering world, this mindset shift was psychologically easy for me, because I’ve always had a bias against the types of coding questions that AI can now answer near-perfectly. They also happen to be the kind of questions I personally do badly at - the ones requiring troves of knowledge or rote memory of specific language capabilities, libraries, and syntax. It is not so psychologically easy for everyone, especially those who have developed a core skill set of running or passing “leetcode-style” interviews. Even before AI, the only coding questions I would personally ask were ones that simply evaluate whether a candidate is lying about being able to code at all, which was and still is surprisingly common. I have interviewed people who list bullet points like 7 years of Java experience but can’t pass a FizzBuzz-like question, and this was a question I gave out on paper with a closed door and no significant time pressure.

June 29, 2025 · 6 minutes · Read more →

Context Caddy

I built a nice little tool to help AI write code for you.

February 13, 2025 · 1 minute · Read more →

Some Custom GPTs

Custom GPTs are free for everyone as of yesterday, so I thought I’d post some of the best ones I’ve made over the last few months for all of you:

Proofreader (https://chatgpt.com/g/g-hjaNCJ8PU-proofreader): This one is super simple. Give it what you’ve written and it will provide no-BS proofreads. It’s not going to hallucinate content, just point out mistakes and parts that don’t make sense.

Make Real (https://chatgpt.com/g/g-Hw8qvqqey-make-real): This makes your napkin drawings into working websites. It’s got some of the same limitations other code-generating AI tools do, but it does a surprisingly good job creating simple working web frontends for your ideas!

June 1, 2024 · 1 minute · Read more →

On AI Software Development

Lots of chatter right now about AI replacing software developers.

I agree - AI will take over software development. The question is: what work will be left when this happens?

January 24, 2024 · 2 minutes · Read more →

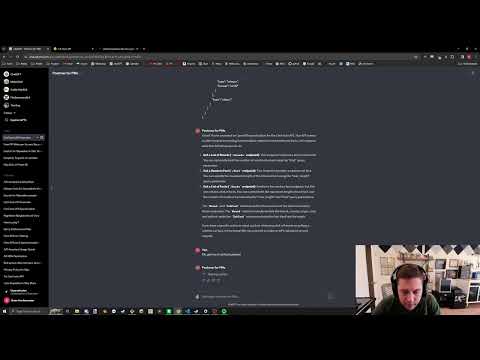

Postman for PMs

I made “Postman for PMs,” a tool to help non-engineers understand and use APIs!

January 13, 2024 · 1 minute · Read more →

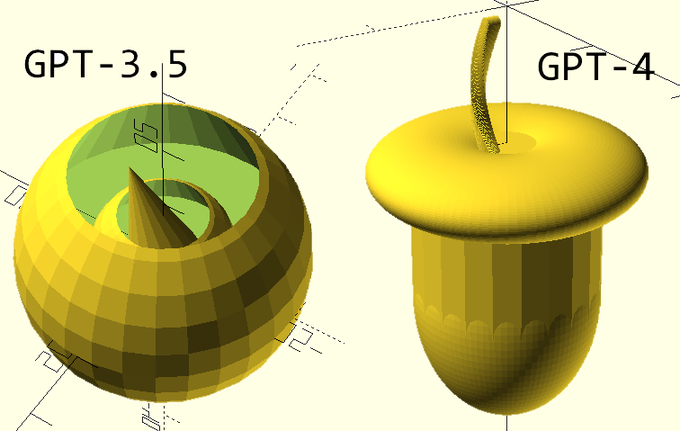

3D Modeling With AI

I have been occasionally challenging GPT to create models using OpenSCAD, a “programming language for 3D models.”

Both GPT-3.5 and GPT-4 struggle, but GPT-4 has been a massive improvement. Here are both models’ outputs after asking for an acorn and 3 messages of me giving feedback:

For the record, it is impressive that these LLMs can get anything right with no visual input or training on shapes like these. Imagine looking at the programming reference for OpenSCAD and trying to do this blind. The strangely intersecting primitives and union issues in the 3.5 version have been normal in my experience. It takes quite a bit of spatial logic to get a model not to look like that.

March 19, 2023 · 1 minute · Read more →

GPT-4 Solar System

I’m writing this post retrospectively as I never published it at the time of creation. It will live here as a “stake in the ground” of AI software capabilities as of March 2023. Note: if you’re reading on Substack, this post won’t work. Go to hockenworks.com/gpt-4-solar-system.

The interactive solar system below was created with minimal help from me, by the very first version of GPT-4, before even function calling was a feature. It was the first of an ongoing series of experiments to see what frontier models could do by themselves - and I’m posting it here because it was the earliest example I saved.

Here’s a link to the chat where it was created, though it’s not possible to continue this conversation directly since the model involved has long since been deprecated: https://chatgpt.com/share/683b5680-8ac8-8006-9493-37add8749387

March 18, 2023 · 3 minutes · Read more →
